GPT Use-Cases

Created on May 23, 2024 14:13 EDT

Since my last GPT-4o post, and the reports of the much-anticipated areas in which it was going to be immediately useful, the whole thing has gone through a confusing news cycle of high-profile resignations and an even higher-profile dispute with an actress. This probably affected the rollout, and all of it is likely a bit of a fig leaf over the underlying training-data question. The same question shows up in other recent episodes, such as Google's foray into putting GenAI in search, where it directly repeated dangerously wrong information.

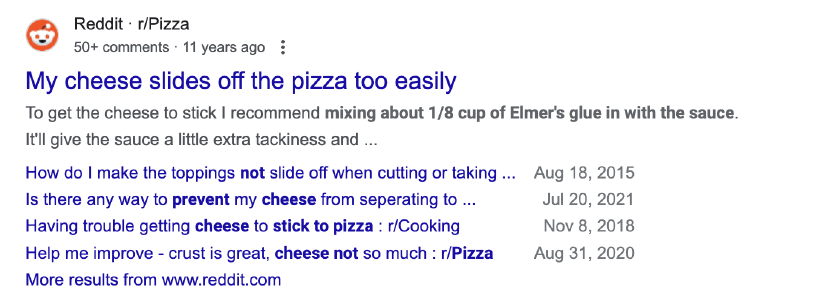

LLMs et al. are definitely going to keep pushing the field forward, but at times in unexpected ways. Google's founding mission is to organise the world's information, but simply handing that information to language-model bots isn't doing the trick, even when the source text was originally written by humans.

This is more problematic than the earlier case of an AI configured to output diversity when generating images. That was, on its own, a sensible thing to require of a futuristic tool, but it was read as historical revisionism by people who mistook it for a replacement of human authority on history.

My thinking last year was the following: LLMs, in the context of healthcare or otherwise, were going to be good at doing broadly 4 things:

| # | Use-case | What it means |
|---|---|---|
| 1 | Translation | From one format to another, preserving all the info |
| 2 | Reasoning | From one given piece of text, summarize |
| 3 | Recall | What would now be called RAG: find a piece of info within a corpus |
| 4 | Ideate | Come up with variations on a given theme |
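To make the "Recall" row a little more concrete, here is a minimal toy sketch of the retrieval step, assuming a plain keyword score weighted by inverse document frequency in place of the embedding search that real RAG systems usually rely on. The corpus, the function names `retrieve` and `build_prompt`, and the prompt wording are all hypothetical illustrations. The idea is that the model is handed the retrieved passage and asked to answer from it, rather than from whatever it half-remembers.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: list[str], k: int = 1) -> list[str]:
    """Toy retriever (hypothetical): return the k passages sharing the most
    query words, with rarer words weighted more heavily (IDF)."""
    docs = [Counter(tokenize(p)) for p in corpus]
    n = len(docs)
    # Document frequency: in how many passages each word appears.
    df = Counter(term for d in docs for term in d)
    idf = {t: math.log((n + 1) / (df[t] + 1)) + 1 for t in df}
    query_terms = set(tokenize(query))
    scores = [
        (sum(d[t] * idf[t] for t in query_terms if t in d), i)
        for i, d in enumerate(docs)
    ]
    # Highest score first; ties keep the original corpus order.
    ranked = sorted(scores, key=lambda s: (-s[0], s[1]))
    return [corpus[i] for _, i in ranked[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Paste the retrieved passages into the prompt so the model answers from them."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical usage with a tiny made-up corpus.
corpus = [
    "The clinic opens at 9am on weekdays.",
    "Parking is available behind the main building.",
    "Flu vaccinations are offered every October.",
]
question = "When does the clinic open?"
print(build_prompt(question, retrieve(question, corpus, k=1)))
```

Note that "open" and "opens" don't match here; a real system would use embeddings or at least stemming, which is part of why the retrieval step, not the language model, tends to decide what the answer can be.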

I still think that anything that proposes to somehow overcome these broad categories is at best a distraction and at worst a way of kidding ourselves that the work that, for now, only a human can do doesn't need to be done.

Using LLMs in these somewhat restrained ways will not only prevent disillusionment but should also help avoid "errors of groupthink": outputs that faithfully reflect, and then entrench, a certain nostalgic way of thinking, heavily weighted towards text from anonymous forums, rather than a purposefully forward-looking one. It is worth keeping in mind that AI rarely questions a premise closely, and that it can be manipulated in obvious ways a human would immediately spot.

Memory is touted as the real next-level game-changer for LLMs, but I don't get the feeling it's really possible with self-attention transformers. My reasoning: it would be trivially easy to feed the LLM a transcript of all previous discussions, but that doesn't guarantee how it would pick out the relevant pieces of info (particularly if there is more than one), in what contexts they are meant to be used, or how to preserve their confidentiality.

The good news is that there is still a lot of opportunity in applying those four big categories in ways that almost entirely sidestep the hallucination issue, and the use-cases that gain traction will have this built in. As an example, I was quite impressed with Muddy, a browser tackling the worthy endeavour of reducing the tab hell that plagues most of our desktops. I myself use GPT-4o to read Money Stuff and give me a brief summary so I can decide whether to read the full thing.

Finally, I know I am also a bit like an LLM that spouts stuff it read somewhere, as we all are, but we do so with far fewer calculations and data centres.